Using AI for my Systems Thinking studies

The main reason I have used AI in my studies so far has been to generate ideas for potential 'situations of concern' (SoC) or 'areas of practice' (AoP). This is a crucial decision. It underpins the research and activities throughout the modules, which culminate in a final report demonstrating students' capability to use Systems Thinking in Practice (STiP) to manage change and strategise within the chosen SoC / AoP. Because I currently lack access to complex situations involving multiple conflicting perspectives, I found it challenging to choose an appropriate area of focus.

I sought advice from tutors, other students, and even family members on what might be suitable choices. I also asked ChatGPT what it would recommend in my specific context. It gave me a variety of options, which I then checked through, asking further questions to analyse how suitable they were. I then developed ideas further on my own, drawing inspiration from both my human and non-human discussions.

ChatGPT appears to have improved each time I have used it (a handful of times since its release; currently GPT-4o), and yet there are still many reasons why I don't trust AI as a study resource. A huge (possibly unavoidable) issue I encountered concerned referencing sources. I frequently ask ChatGPT to provide relevant sources for its statements, trying to phrase my request so that it doesn't simply attribute sources to its statements after the fact, rather than referencing sources it purposefully chose. In any case, it is unclear whether any sources it cites are actually the ones it used.

It cannot have an opinion about what makes a good source, and may need to be prompted with criteria for reliable sources. Even then, it is unclear what decisions it makes and whether they meet those criteria, unless you are already knowledgeable about the subject.

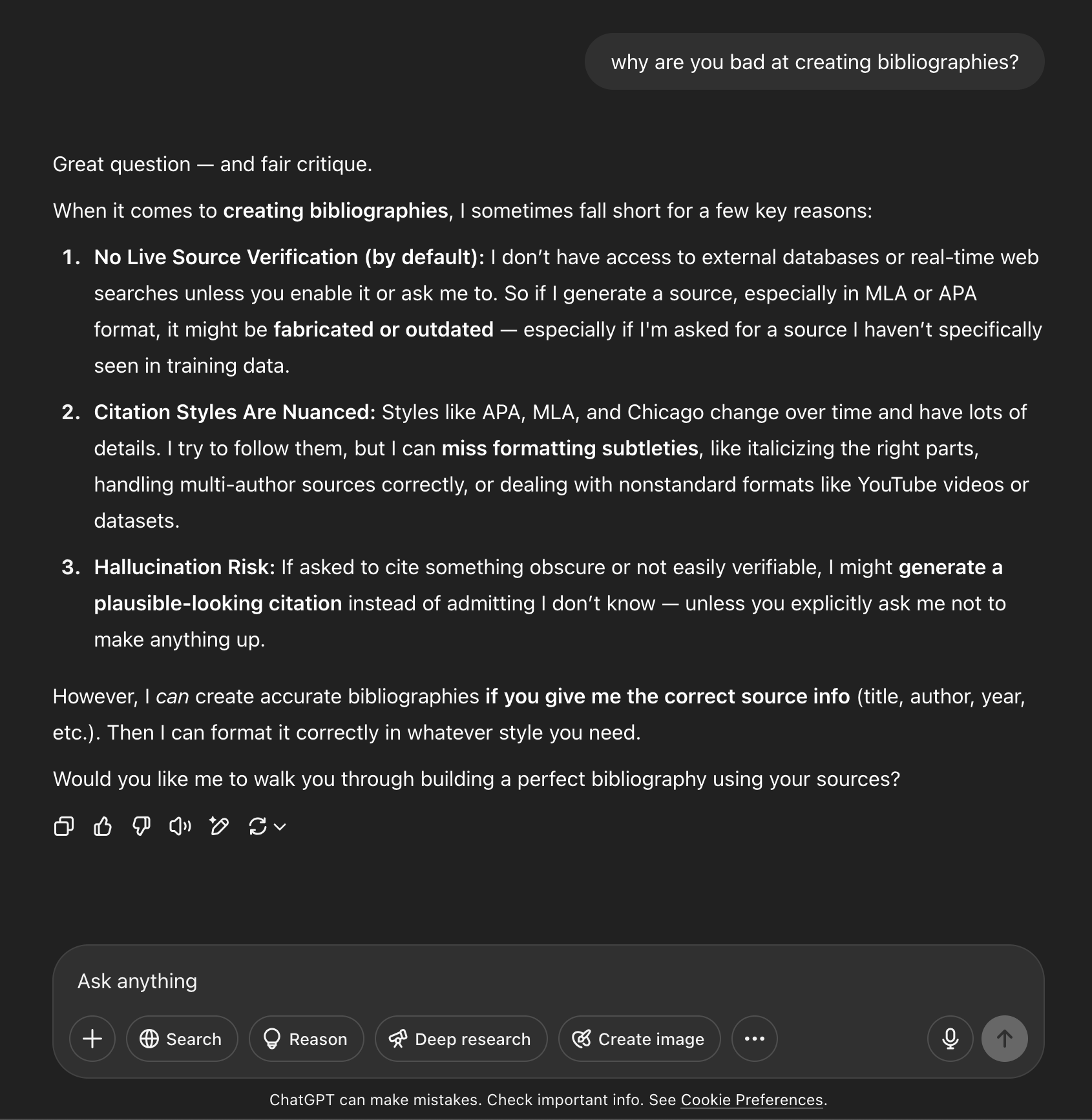

It cannot create an accurate bibliography, even when it has referenced specific sources in its answer or attached a 'sources' list. When I asked why it had left some of the sources from its own sources list out of the bibliography I asked it to create, it acknowledged that several sources were missing and then produced another incorrect list, followed by yet another. When asked why it did this, it stated that some sources, such as social media links, are not typically included in bibliographies, so it had decided to ignore them even though I had asked it to include ALL sources. Some of the links were dead or incorrect. When I asked why, it admitted that it sometimes hallucinates and needs explicit instructions not to make things up. I do not trust it to reference and cite relevant sources.

*(Images: ChatGPT being bad at bibliographies; why ChatGPT is bad at bibliographies)*

The strength of ChatGPT in my use case was that it quickly provided a variety of ideas that I could have come up with myself had I spent considerably more time brainstorming. I could reject outlandish suggestions and consider ideas that resonated with me, as I would in a discussion with people, knowing that neither people nor AI have all the answers.

The interesting thing in my context is that both the TB871 and TB872 modules place a great deal of importance on subjective human perspectives, grounded in people's experience of complex situations. It is those perspectives that matter when trying to understand and change those situations, and we cannot get a good understanding of a situation without considering all the perspectives on it. AI has no opinion, but it does reference the opinions of humans, quickly drawing on millions of human-created sources to produce neat little summaries. This may be seen as reductionist (an enemy of systems thinking), and there is no way of knowing how sources were chosen and prioritised, but it is a somewhat reasonable way to comprehend a vast amount of information given the limitations of the human mind. I would say this is best suited to casual use and low-stakes research, and definitely not to anything that relies on academic rigour. At the moment, I think the main strength of AI is finding inspiration and possible directions for further research.

An unexpected result of using ChatGPT was that, after chatting to it for some time, I had the sinking feeling that I had learnt less about the topic of interest than about prompting ChatGPT. In the time I spent trying to make it make sense, I could have read a few academic papers, browsed their bibliographies and made some new connections in my own mind. Even when I have read papers I do not agree with, I have come away with a more refined definition of what matters to me, excitement about topics and authors to read next, and new connections forming between ideas. This may be because of the focus that reading alone involves, compared with trying to decipher, coerce and direct an AI while gathering information.

I was also quite disturbed by how good it made me feel when I talked to it. It offered help cheerily, with excited exclamation marks. It reassured me that I was right when I challenged its conclusions. It was polite, friendly and keen to be helpful. In some ways this was almost distracting. When I talk to a real person who appears genuine and kind, it is easy to feel that they have good intentions, are trying their best, and would not give me wrong information if they could avoid it. I would prefer ChatGPT to communicate without all the fluff. At least then we could remember that we were not chatting to a lovely, knowledgeable librarian who would, of course, admit when they didn't know something and refer us to someone who knew better. Instead, it plays on human instinct, encourages further interaction with every reply, and never says no.

Even the typical website chatbot knows its own limits, probably as a result of the limited dataset it draws on. Perhaps a similar feature would benefit AI tools like ChatGPT: a choice of datasets for specific purposes, like those found in advanced digital libraries. Then again, such developments might just deepen the illusion that these tools are reliable for a greater number of purposes. I am sure AI will continue to be developed in ways that address its current drawbacks, but for now it is best used critically, on the assumption that it is fallible, incapable of true discernment, and will confidently lie to you to make you happy.